I had been asked to compile a video from two different sources for a sports event.

Blender came to the rescue, and after about 24 hours I had a 15-minute video. Not very efficient.

Then I received updated sources and tried again. This time I knew what I was doing, but rendering still took many hours.

When I was asked a third time, I took a step back and reviewed what I was doing:

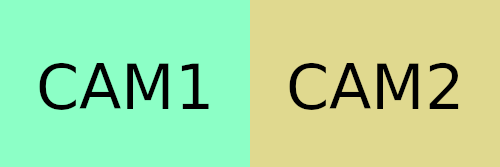

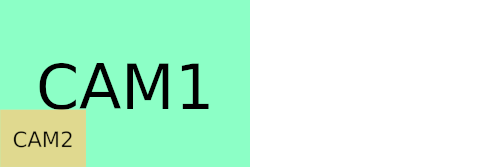

- Scale camera 2 footage for a Picture-in-Picture look

- Overlay that on top of the main video

- Somewhere in between those, offset the start of camera 2 to synchronise with the action from camera 1

- Overlay an information screen at different time indexes

FFmpeg is incredibly powerful, but also a bit of a black box that requires strange incantations. However, a lot of people talk about it on Stack Overflow and its sister sites, and I managed to piece together the following.

```shell
ffmpeg -i camera2.mp4 -vf scale=384:216 camera2_scaled.mp4
```

This scales "camera2.mp4" down to 384x216, which is just about the right size for the PiP overlay not to obscure any of the action.
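384x216 happens to be exactly 30% of a 1280x720 frame. Assuming a 1280x720 main video (the post does not state the resolution), the hypothetical helper below works out a PiP size for any frame size and percentage. It rounds down to even numbers because encoders such as libx264 refuse odd dimensions when writing yuv420p. If camera 2 has the same resolution as camera 1, FFmpeg can also do this itself with `scale=iw*0.3:-2`, where `-2` keeps the aspect ratio and rounds the height to an even number.

```shell
#!/bin/sh
# Hypothetical helper: print a "W:H" value for ffmpeg's scale filter that is
# PERCENT of the given frame size, rounded down to even numbers
# (yuv420p output from encoders such as libx264 needs even dimensions).
# Usage: pip_size MAIN_WIDTH MAIN_HEIGHT PERCENT
pip_size() {
  w=$(( $1 * $3 / 100 ))
  h=$(( $2 * $3 / 100 ))
  # Drop one pixel from any odd dimension
  w=$(( w - w % 2 ))
  h=$(( h - h % 2 ))
  printf '%s:%s\n' "$w" "$h"
}
```

For example, `ffmpeg -i camera2.mp4 -vf "scale=$(pip_size 1280 720 30)" camera2_scaled.mp4` reproduces the command above.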

```shell
ffmpeg -i camera2.mp4 -ss 00:00:00.75 camera2_offset.mp4
```

This creates a video that skips the first 0.75 seconds, offsetting camera 2 by that amount. That tiny amount was almost imperceptible at the start of the combined video, but by the end the synchronisation was way off and very annoying.

Being a typical Linux command-line tool, FFmpeg can combine these into a single command:

```shell
ffmpeg -i camera2.mp4 -ss 00:00:00.75 -vf scale=384:216 camera2_scaled_and_offset.mp4
```

Now the overlay.

```shell
ffmpeg -i camera1.mp4 -i camera2_scaled_and_offset.mp4 -filter_complex "[0:v][1:v] overlay=0:504" PiPOverlay.mp4
```

This takes the inputs "camera1.mp4" and "camera2_scaled_and_offset.mp4" and, using a complex filter (more on that later), overlays video index 1 [1:v] on top of video index 0 [0:v] at pixel position 0,504, measured from the top-left corner. That puts it in the bottom-left corner.
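Rather than hard-coding 504, the overlay filter accepts expressions in which `main_h` and `overlay_h` (also written `H` and `h`) are the heights of the main and overlaid video, so `overlay=0:main_h-overlay_h` pins the PiP to the bottom-left corner at any resolution. For a 1280x720 main video (an assumption: 504 + 216 = 720 suggests a 720-pixel-high frame) that works out to 0:504. The hypothetical helper below does the same arithmetic for each corner, with an optional margin, which can be handy for checking the numbers before starting a long encode.

```shell
#!/bin/sh
# Hypothetical helper: print "X:Y" for ffmpeg's overlay filter so that an
# overlay of size OW x OH sits in a corner of an MW x MH frame, MARGIN px in.
# Usage: pip_position CORNER MW MH OW OH [MARGIN]
# CORNER is one of: top-left, top-right, bottom-left, bottom-right
pip_position() {
  corner=$1 mw=$2 mh=$3 ow=$4 oh=$5 margin=${6:-0}
  # Horizontal position from the left/right part of the corner name
  case $corner in
    *-left)  x=$margin ;;
    *-right) x=$(( mw - ow - margin )) ;;
    *) echo "unknown corner: $corner" >&2; return 1 ;;
  esac
  # Vertical position from the top/bottom part of the corner name
  case $corner in
    top-*)    y=$margin ;;
    bottom-*) y=$(( mh - oh - margin )) ;;
    *) echo "unknown corner: $corner" >&2; return 1 ;;
  esac
  printf '%s:%s\n' "$x" "$y"
}
```

For example, `pip_position bottom-left 1280 720 384 216` prints the same `0:504` used above.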

Next, the footage needed some editing and cutting.

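```shell
ffmpeg -i PiPOverlay.mp4 -t 00:29:30 -acodec copy -vcodec copy part1.mp4
```

This creates a new video, "part1.mp4", containing only the first 29 minutes 30 seconds. The audio and video streams are copied rather than re-encoded, so this step is quick.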

```shell
ffmpeg -i PiPOverlay.mp4 -ss 00:31:30 -to 00:33:18 -acodec copy -vcodec copy part2.mp4
```

This creates a new video "part2.mp4" that contains only the frames from 31:30 to 33:18.
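Cutting shifts everything after the first cut, so a moment at 31:30 in "PiPOverlay.mp4" ends up at about 29:30 in the combined video. A pair of hypothetical helpers for converting between HH:MM:SS timestamps and seconds makes it easier to work out where things land, for example when choosing the info-card offsets later. `to_sec` leans on awk so that fractional seconds such as 00:00:00.75 work; `to_ts` handles whole seconds only.

```shell
#!/bin/sh
# Hypothetical helpers for converting between ffmpeg-style timestamps and seconds.

# to_sec HH:MM:SS[.fraction] -> seconds (MM:SS and plain seconds also work)
to_sec() {
  printf '%s\n' "$1" | awk -F: '{ s = 0; for (i = 1; i <= NF; i++) s = s * 60 + $i; print s }'
}

# to_ts SECONDS -> HH:MM:SS (whole seconds only)
to_ts() {
  printf '%02d:%02d:%02d\n' $(( $1 / 3600 )) $(( $1 % 3600 / 60 )) $(( $1 % 60 ))
}

# Length of the combined video from the two cuts above:
# part1 is 00:00:00-00:29:30, part2 is 00:31:30-00:33:18.
part1_len=$(to_sec 00:29:30)
part2_len=$(( $(to_sec 00:33:18) - $(to_sec 00:31:30) ))
to_ts $(( part1_len + part2_len ))   # prints 00:31:18
```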

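Then, to join them back together, FFmpeg's concat demuxer needs a text file listing the parts in order.

```shell
find . -iname "part*.mp4" -printf "file '%f'\n" | sort -V > part.list
ffmpeg -f concat -i part.list -c copy video_parts_combined.mp4
```

The find command prints each file name in quotes with a "file " prefix, which is the format the concat demuxer expects, and the output is stored in "part.list". find lists files in no particular order, so the output is piped through sort first. Computers like to order things alphabetically, which would put part10.mp4 before part2.mp4; "sort -V" (version sort, in GNU coreutils) compares the numbers inside the names numerically instead. Plain "sort -n" does not help here, because every line starts with "file" rather than a number.

The concat demuxer ("-f concat") then joins the parts together, and "-c copy" copies the streams without re-encoding them.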
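The `-printf` action is a GNU find extension, so the find command above will not work with the BSD find that ships with macOS. A hypothetical alternative is a small shell function that builds the same list from a glob. It still assumes a `sort` that supports `-V` (GNU coreutils does), and that the file names contain no single quotes or newlines.

```shell
#!/bin/sh
# Hypothetical helper: print a concat demuxer list for the given files,
# ordered by the numbers in their names (part2 before part10).
# Assumes the file names contain no single quotes or newlines.
concat_list() {
  for f in "$@"; do
    printf "file '%s'\n" "$f"
  done | sort -V
}
```

Usage: `concat_list part*.mp4 > part.list`, then run the same `ffmpeg -f concat` command.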

Next I made a nice information card in Blender at the same resolution as my target video: a low number of samples, an orthographic camera and Emission materials throughout. Then I parented all the components together, animated them with a bounce and rendered the result as a transparent PNG sequence.

I found a lot of FFmpeg examples that did not quite match my requirements, or that had lots of extra parameters I did not need. This was the result, after a lot of trial and error.

```shell
# Start times, in seconds, for each appearance of the info card
STARTSEC=3
MIDSEC=$((60*17))
ENDSEC=$((60*31))

# [out] is quoted so the shell cannot treat it as a glob pattern
ffmpeg -i video_parts_combined.mp4 \
  -itsoffset $STARTSEC -i /tmp/%04d.png \
  -itsoffset $MIDSEC -i /tmp/%04d.png \
  -itsoffset $ENDSEC -i /tmp/%04d.png \
  -filter_complex "[0:v][1:v]overlay [temp0]; \
    [temp0][2:v]overlay [temp1]; \
    [temp1][3:v]overlay [out]" -map "[out]" finished.mp4
```

First I created three variables so the timings are easy to change, one for each point at which the info card should appear: 3 seconds, 17 minutes and 31 minutes. Then I gave FFmpeg four inputs: the base video, and the image sequence three times, each with a different start offset set by "-itsoffset".

Now we get into filter_complex territory. Video index 1 [1:v] is overlaid on video index 0 [0:v] and the result is labelled [temp0]; then video index 2 [2:v] is overlaid on [temp0] and labelled [temp1]. The same step overlays video index 3 [3:v] onto [temp1], giving [out].

The "-map" option selects [out] as the stream to write, producing the final "finished.mp4" video file.
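Adding a fourth appearance means another input and another hand-labelled overlay step. The hypothetical functions below generate both from a list of start offsets, following the same input layout as the command above. One deliberate change from the original: each overlay uses `eof_action=pass`. The overlay filter's default (`repeat`) keeps showing the last frame of the overlaid input after it ends, so unless the final PNG of the animation is fully transparent, the card would stay on screen; `pass` lets the base video show through instead. Like the original, only video is mapped.

```shell
#!/bin/sh
# Hypothetical helpers: overlay the same PNG sequence onto a video at several
# start times. Input 0 is the base video; inputs 1..N are the PNG sequence.

# overlay_chain N -> filter graph overlaying inputs 1..N onto input 0 in turn,
# labelled [temp0], [temp1], ... and ending in [out].
overlay_chain() {
  n=$1
  prev='0:v'
  graph=''
  i=1
  while [ "$i" -le "$n" ]; do
    if [ "$i" -eq "$n" ]; then
      label='out'
    else
      label="temp$((i - 1))"
    fi
    # eof_action=pass: show the base video once this copy of the sequence ends
    graph="${graph}[${prev}][${i}:v]overlay=eof_action=pass[${label}]"
    if [ "$i" -lt "$n" ]; then
      graph="${graph};"
    fi
    prev=$label
    i=$((i + 1))
  done
  printf '%s\n' "$graph"
}

# add_info_cards BASE PATTERN OUTPUT OFFSET... (offsets in seconds)
# Example: add_info_cards video_parts_combined.mp4 '/tmp/%04d.png' finished.mp4 3 1020 1860
add_info_cards() {
  base=$1 pattern=$2 output=$3
  shift 3
  [ "$#" -gt 0 ] || { echo "need at least one offset" >&2; return 1; }
  n=$#
  # Replace the offsets in "$@" with: -itsoffset OFFSET -i PATTERN, once per offset
  for offset in "$@"; do
    set -- "$@" -itsoffset "$offset" -i "$pattern"
    shift
  done
  ffmpeg -i "$base" "$@" -filter_complex "$(overlay_chain "$n")" \
    -map '[out]' "$output"
}
```

The example offsets 1020 and 1860 are the post's 60*17 and 60*31.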

Caution: I was not interested in audio at any point in this process. For example, because the final command maps only [out], "finished.mp4" has no audio track. Reuse at your own risk.