Originally there was the JSON manipulation to track the bike riders, then coercing the Have I Been Pwned data. Now I have a taste for jq I need to use it everywhere \me laughs maniacally.

The Gentoo package system Portage tracks all the installs and how long they each took, amongst other things. As some specific packages take a long time to install, I really wanted to be alerted when that was going to happen. I am looking at you, Firefox.

Firefox takes about 45 minutes to install. I am not complaining. The time simply reflects the fact that the application is constructed from lots of well-defined components, each needing compilation. Lots.

There are also a couple of other packages that I have noticed can be put in the *not quick* bucket. So what if I could take the log data and pass it through jq to get a list of the heavy hitters?

Accessing the raw emerge.log is not a good idea, especially as a number of utilities exist to interpret the data dump. These include the incredibly quick qlop.

qlop -Html

Will produce a nice list of all the Last Merged packages with the Times, all in a Human readable format.

Then, just to spoil that serendipitous parameter arrangement, I want all merges, not just the latest.

qlop -Htm

results in (cut for brevity)

2024-07-26T00:47:00 >>> virtual/rust: 7 seconds

2024-07-26T00:47:07 >>> app-misc/fastfetch: 20 seconds

2024-07-26T00:47:27 >>> x11-apps/appres: 11 seconds

2024-07-26T00:47:38 >>> dev-cpp/abseil-cpp: 31 seconds

As you can see, most things take no time at all to install.

To find the offenders, let's only look at those that take minutes (more than 9 of them) and clean it up a tad

qlop -Htm | grep minutes | sed -re "s/.+>>>\s//" -e "s/(\S+):\s([0-9]{2}[^,]+).+/\2 \1/"

gives (cut for brevity)

13 minutes media-gfx/prusaslicer

17 minutes media-gfx/prusaslicer

11 minutes media-gfx/openvdb

16 minutes media-gfx/prusaslicer

But those duplicates are not very helpful. Need to convert this raw text into JSON.

qlop -Htm | grep minutes | sed -re "s/.+>>>\s//" -e "s/(\S+):\s([0-9]{2}[^,]+).+/\2 \1/" | sed -re "s/^(\S+)\s\S+\s(.+)/{\"mins\":\1, \"name\":\"\2\"}/"

Results in something that is NOT technically a single JSON document (it is one object per line), but jq can handle it just fine.

{"mins":13, "name":"media-gfx/prusaslicer"}

{"mins":17, "name":"media-gfx/prusaslicer"}

{"mins":11, "name":"media-gfx/openvdb"}

{"mins":16, "name":"media-gfx/prusaslicer"}
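For anyone without a Gentoo box to hand, the text munging can be tried on canned input. This is only a sketch: the sample lines are made up in the shape I assume `qlop -Htm` prints for longer builds (`NN minutes, N seconds`), and `to_json_lines` is a hypothetical helper name wrapping the same grep and sed stages as above. GNU sed is assumed for `-r`, `\s` and `\S`.

```shell
# Hypothetical helper: the same grep and sed stages as the pipeline above,
# reading qlop-style lines on stdin instead of calling qlop.
to_json_lines() {
    grep minutes |
        sed -re 's/.+>>>\s//' -e 's/(\S+):\s([0-9]{2}[^,]+).+/\2 \1/' |
        sed -re 's/^(\S+)\s\S+\s(.+)/{"mins":\1, "name":"\2"}/'
}

# Made-up sample lines; the "NN minutes, N seconds" shape is an assumption.
sample='2024-07-26T00:47:27 >>> x11-apps/appres: 11 seconds
2024-07-26T01:10:00 >>> media-gfx/prusaslicer: 13 minutes, 5 seconds
2024-07-26T02:30:00 >>> media-gfx/openvdb: 11 minutes, 42 seconds'

printf '%s\n' "$sample" | to_json_lines
# → {"mins":13, "name":"media-gfx/prusaslicer"}
# → {"mins":11, "name":"media-gfx/openvdb"}
```

Worth knowing: a line whose duration does not start with two digits (say `9 minutes, 3 seconds`) is not rewritten by the second sed expression, so it pays to check what the last sed makes of such lines on your own log.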

jq has a specific option for slurping up objects in this format. Alternately I could have added a comma to the end of each line and wrapped the results in square brackets...
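The option in question is `-s` (long form `--slurp`). A quick sketch of the difference it makes, using two of the objects from above fed in on stdin:

```shell
# Two objects, one per line, as produced by the sed stages.
lines='{"mins":13, "name":"media-gfx/prusaslicer"}
{"mins":11, "name":"media-gfx/openvdb"}'

# Without -s, jq runs the filter once per object: prints 13, then 11.
printf '%s\n' "$lines" | jq '.mins'

# With -s, jq first collects every object into one array, so array
# functions such as length or group_by see all of them at once: prints 2.
printf '%s\n' "$lines" | jq -s 'length'
```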

Now I need to group the names together and find the maximum number of minutes for each.

group_by(.name) | map({name: .[0].name, minutes: map(.mins) | max})

try it at jqplay.org

Grouping by the name and then selecting the maximum.
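As a worked run, here is the max filter applied to the four sample objects shown earlier. The only additions are `-s` to slurp the lines into one array and `-c` for compact output.

```shell
# The four sample objects shown earlier, one per line.
lines='{"mins":13, "name":"media-gfx/prusaslicer"}
{"mins":17, "name":"media-gfx/prusaslicer"}
{"mins":11, "name":"media-gfx/openvdb"}
{"mins":16, "name":"media-gfx/prusaslicer"}'

# group_by sorts the groups by name, so openvdb comes out first.
printf '%s\n' "$lines" |
    jq -s -c 'group_by(.name) | map({name: .[0].name, minutes: map(.mins) | max})'
# → [{"name":"media-gfx/openvdb","minutes":11},{"name":"media-gfx/prusaslicer","minutes":17}]
```

The three prusaslicer entries collapse into one, keeping the slowest build of 17 minutes.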

Then we can get fancy and get the average rather than the max

group_by(.name) | map({name: .[0].name, minutes: (map(.mins) | add /length ) })

try it at jqplay.org

Finally, let's put it all together and get the top ten offenders (on average)

group_by(.name) | map({name: .[0].name, minutes: (map(.mins) | add / length) }) | sort_by(.minutes) | reverse | limit(10; .[])

try it at jqplay.org (selects top 1 of 2)
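The same average-and-rank filter can be checked by hand on a tiny data set. A sketch only: the timings below are made up, `top_offenders` is a hypothetical helper name, and the count is passed in with `--argjson` rather than hard-coded to 10.

```shell
# Made-up timings for three packages named in this post.
lines='{"mins":40, "name":"www-client/firefox"}
{"mins":50, "name":"net-libs/webkit-gtk"}
{"mins":60, "name":"net-libs/webkit-gtk"}
{"mins":11, "name":"media-gfx/openvdb"}
{"mins":30, "name":"www-client/firefox"}'

# Hypothetical helper: $1 is how many entries to keep.
top_offenders() {
    jq -s -c --argjson n "$1" '
        group_by(.name)
        | map({name: .[0].name, minutes: (map(.mins) | add / length)})
        | sort_by(.minutes) | reverse
        | limit($n; .[])'
}

printf '%s\n' "$lines" | top_offenders 2
# → {"name":"net-libs/webkit-gtk","minutes":55}
# → {"name":"www-client/firefox","minutes":35}
```

Note that `limit` emits a stream of separate objects rather than an array, which is why the real output further down has no surrounding square brackets.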

this is the short complete command line

qlop -Htm | grep minutes | sed -re "s/.+>>>\s//" -e "s/(\S+):\s([0-9]{2}[^,]+).+/\2 \1/" | sed -re "s/^(\S+)\s\S+\s(.+)/{\"mins\":\1, \"name\":\"\2\"}/" | jq -s "group_by(.name) | map({name: .[0].name, minutes: (map(.mins) | add / length) }) | sort_by(.minutes) | reverse | limit(10; .[])"

resulting in (cut for brevity)

{

"name": "net-libs/webkit-gtk",

"minutes": 51.44444444444444

}

{

"name": "dev-lang/rust",

"minutes": 42.57142857142857

}

{

"name": "sys-devel/gcc",

"minutes": 42.370370370370374

}

{

"name": "www-client/firefox",

"minutes": 37.79710144927536

}

And I have my culprits!

Last post I explained the how and the why, this time we are going to get a lot more technical.

I had my list of breached email addresses and the name of the breaches they had come from. Unfortunately there was no date information. Which account had been breached last?

curl "https://haveibeenpwned.com/api/v3/breaches" -o /tmp/breaches-2024-07-14.json

Saved a new JSON file with all the breaches to my tmp folder.

All I needed to do was match up the breach data with the account data. There were a number of options, from eyeballing it, parsing it line by line or loading it into a SQLite database, to dynamically grouping the data on the command line. No prizes for guessing which one I chose.

Here is a very simplified JSON file containing email accounts and which breach they have been detected in.

{

"Breaches": {

"email1": [

"OnlinerSpambot",

"Dropbox"

],

"email2": [

"OnlinerSpambot"

],

"email3": [

"Dropbox"

]

}

}

Here email1 is detected in two breaches and email2 and email3 are each in only one.

Running

jq '.' accounts.json

will just output the contents as a new JSON object. Great way to make sure the data is valid.
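If only the validity check is wanted, jq's exit status is enough. A small sketch, where `is_valid_json` is a hypothetical helper name and the filter `empty` is used so that nothing is printed:

```shell
# Hypothetical helper: succeeds only if stdin parses as JSON.
# The filter "empty" prints nothing, so only jq's exit status matters.
is_valid_json() {
    jq empty > /dev/null 2>&1
}

# A complete document passes, a truncated one fails.
printf '%s' '{"Breaches": {"email1": ["Dropbox"]}}' | is_valid_json && echo "valid"
printf '%s' '{"Breaches": {"email1": ["Dropbox"'    | is_valid_json || echo "broken"
```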

The first job is to pull this data apart, ready to be merged with the breach details.

jq '.Breaches | to_entries | map_values({key:.value} + { email: .key }) | map({key:.key[], email:.email})' accounts.json

try it at jqplay.org

Breaks down to.
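One way to watch each stage do its job is to run the filter a piece at a time. This is a sketch against a copy of the simplified sample, written to a throwaway directory; `flatten_accounts` is a hypothetical helper name.

```shell
# Work in a throwaway directory so nothing real is overwritten.
dir=$(mktemp -d)
cat > "$dir/accounts.json" <<'EOF'
{"Breaches": {"email1": ["OnlinerSpambot", "Dropbox"],
              "email2": ["OnlinerSpambot"],
              "email3": ["Dropbox"]}}
EOF

# Stage 1: to_entries turns the object into an array of {key, value} pairs,
# key being the email and value the list of breach names.
jq -c '.Breaches | to_entries' "$dir/accounts.json"

# Stage 2: map_values rebuilds each pair so the breach list sits under
# "key" and the address under "email".
jq -c '.Breaches | to_entries
       | map_values({key: .value} + {email: .key})' "$dir/accounts.json"

# Stage 3: .key[] emits one object per breach name and map collects them all.
flatten_accounts() {
    jq -c '.Breaches | to_entries
           | map_values({key: .value} + {email: .key})
           | map({key: .key[], email: .email})' "$1"
}
flatten_accounts "$dir/accounts.json"
```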

This gives us

[

{

"key": "OnlinerSpambot",

"email": "email1"

},

{

"key": "Dropbox",

"email": "email1"

},

{

"key": "OnlinerSpambot",

"email": "email2"

},

{

"key": "Dropbox",

"email": "email3"

}

]

a nice neat array containing one entry per email account per breach instance.

jq 'JOIN(INDEX(.Sources[]; .Name); .Breaches[]; .key) | add' /tmp/output_from_previous.json

using the output from the previous command and adding Sources for the breach details.

{

"Breaches": [

{

"key": "OnlinerSpambot",

"email": "email1"

},

{

"key": "Dropbox",

"email": "email1"

},

{

"key": "OnlinerSpambot",

"email": "email2"

},

{

"key": "Dropbox",

"email": "email3"

}

],

"Sources": [

{

"Name": "OnlinerSpambot",

"Domain": "www.OnlinerSpambot.com",

"BreachDate": "2005-01-01",

"AddedDate": "2005-10-26T23:35:45Z",

"ModifiedDate": "2010-12-10T21:44:27Z"

},

{

"Name": "Dropbox",

"Domain": "www.Dropbox.com",

"BreachDate": "2025-03-01",

"AddedDate": "2025-10-26T23:35:45Z",

"ModifiedDate": "2027-12-10T21:44:27Z"

},

{

"Name": "000webhost",

"Domain": "000webhost.com",

"BreachDate": "2015-03-01",

"AddedDate": "2015-10-26T23:35:45Z",

"ModifiedDate": "2017-12-10T21:44:27Z"

}

]

}

try it at jqplay.org

This breaks down to
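Run a piece at a time, the three parts look like this. A sketch on a cut-down copy of the sample data (two rows, two sources, fewer fields), written to a throwaway directory:

```shell
dir=$(mktemp -d)
# Cut-down sample: two flattened rows and two breach records.
cat > "$dir/data.json" <<'EOF'
{"Breaches": [{"key": "Dropbox", "email": "email1"},
              {"key": "OnlinerSpambot", "email": "email2"}],
 "Sources":  [{"Name": "OnlinerSpambot", "AddedDate": "2005-10-26T23:35:45Z"},
              {"Name": "Dropbox", "AddedDate": "2025-10-26T23:35:45Z"}]}
EOF

# INDEX builds a lookup object keyed by each source's Name.
jq -c 'INDEX(.Sources[]; .Name) | keys' "$dir/data.json"
# → ["Dropbox","OnlinerSpambot"]

# JOIN emits a [row, matching source] pair for every row in .Breaches,
# using the row's .key to look up the source.
jq -c 'JOIN(INDEX(.Sources[]; .Name); .Breaches[]; .key)' "$dir/data.json"

# add merges each pair into a single object.
jq -c 'JOIN(INDEX(.Sources[]; .Name); .Breaches[]; .key) | add' "$dir/data.json"
```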

This gives us the combined data

{

"key": "OnlinerSpambot",

"email": "email1",

"Name": "OnlinerSpambot",

"Domain": "www.OnlinerSpambot.com",

"BreachDate": "2005-01-01",

"AddedDate": "2005-10-26T23:35:45Z",

"ModifiedDate": "2010-12-10T21:44:27Z"

}

{

"key": "Dropbox",

"email": "email1",

"Name": "Dropbox",

"Domain": "www.Dropbox.com",

"BreachDate": "2025-03-01",

"AddedDate": "2025-10-26T23:35:45Z",

"ModifiedDate": "2027-12-10T21:44:27Z"

}

{

"key": "OnlinerSpambot",

"email": "email2",

"Name": "OnlinerSpambot",

"Domain": "www.OnlinerSpambot.com",

"BreachDate": "2005-01-01",

"AddedDate": "2005-10-26T23:35:45Z",

"ModifiedDate": "2010-12-10T21:44:27Z"

}

{

"key": "Dropbox",

"email": "email3",

"Name": "Dropbox",

"Domain": "www.Dropbox.com",

"BreachDate": "2025-03-01",

"AddedDate": "2025-10-26T23:35:45Z",

"ModifiedDate": "2027-12-10T21:44:27Z"

}

Now we just need to sort it by the AddedDate.

(using the same data as the last command)

jq '[JOIN(INDEX(.Sources[]; .Name); .Breaches[]; .key) | add] | sort_by(.AddedDate,.email)' /tmp/data.json

try it at jqplay.org

(Note that the JOINed data is wrapped in square brackets to create an array before sorting.)
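The wrapping matters because `sort_by` works on an array, while the JOIN emits a stream of separate objects. A small sketch of the same idea, with three made-up rows on stdin standing in for that stream and `[inputs]` playing the part of the square brackets:

```shell
# Three loose objects, behaving like the stream that JOIN ... | add emits.
rows='{"email":"email3","AddedDate":"2025-10-26T23:35:45Z"}
{"email":"email2","AddedDate":"2005-10-26T23:35:45Z"}
{"email":"email1","AddedDate":"2005-10-26T23:35:45Z"}'

# -n stops jq reading input by itself; [inputs] gathers the whole stream
# into one array, which sort_by then orders by date and, for ties, by email.
printf '%s\n' "$rows" |
    jq -n -c '[inputs] | sort_by(.AddedDate, .email) | map(.email)'
# → ["email1","email2","email3"]
```

The dates sort correctly as plain strings because they are all ISO 8601 timestamps in the same timezone.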

Now I can clearly see the email account that was breached most recently!

FYI the final command list with the real data was

jq '.Breaches | to_entries | map_values({key:.value} + { email: .key }) | map({key:.key[], email:.email})' /tmp/mybreaches.json > /tmp/mybreaches_mapped.json

jq '[JOIN(INDEX(input.[]; .Name); .[]; .key) | add] | sort_by(.AddedDate,.email)' /tmp/mybreaches_mapped.json /tmp/breaches-2024-07-14.json

Which I am sure can be reduced further, but that works for me :D
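One possible reduction, offered as a sketch rather than what I actually ran: `--slurpfile` can load the breach list into a variable, so the flattening and the join happen in a single jq call with no intermediate file. The file contents below are cut-down stand-ins for the real data, and `breaches_by_date` is a hypothetical helper name.

```shell
dir=$(mktemp -d)
# Stand-ins for the account file and the downloaded breach list.
cat > "$dir/mybreaches.json" <<'EOF'
{"Breaches": {"email1": ["OnlinerSpambot", "Dropbox"], "email3": ["Dropbox"]}}
EOF
cat > "$dir/breaches.json" <<'EOF'
[{"Name": "OnlinerSpambot", "AddedDate": "2005-10-26T23:35:45Z"},
 {"Name": "Dropbox", "AddedDate": "2025-10-26T23:35:45Z"}]
EOF

# Hypothetical helper: $1 is the account file, $2 the breach list.
# --slurpfile binds $details to an array holding every JSON value in $2,
# so $details[0] is the breach array itself.
breaches_by_date() {
    jq -c --slurpfile details "$2" '
        [ JOIN(INDEX($details[0][]; .Name);
               (.Breaches | to_entries[] | {key: .value[], email: .key});
               .key)
          | add ]
        | sort_by(.AddedDate, .email)' "$1"
}
breaches_by_date "$dir/mybreaches.json" "$dir/breaches.json"
```

The last element of the resulting array is the most recently added breach.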

I have been using Have I Been Pwned (HIBP) for many many years.

If there had been a new breach and my domain was in the email addresses identified, the service would send me an email. The email would simply link to the site and I would perform a new domain search. A nice JSON file would be produced listing all the addresses in all the breaches that HIBP knew about.

Then, not so long ago, I got an email stating that an address at my domain had been in a breach, but the domain search would no longer work. Apparently I have too many email addresses at the domain and must be a business.

This was slightly upsetting. I am not a business and anyone glancing at the myriad of email addresses could have clearly seen that. I don't like signing up for things, but this was different. The message was clearly indicating that I needed to find out which breach contained which new email address!!

Troy Hunt to the rescue! I brought up the issue in his live stream for a weekly YouTube video and he referred me to his wife. She then arranged for me to get a 50% discount code. The service is really important and I had heard the news about charging companies in the past. I want to support HIBP so I paid.

As before I got a nice fat JSON file with all the details. I just had to use the API key that my new account generated.

I could have manually parsed this file with my human eyes, but where is the fun in that?

Stay tuned for a deep delve into JSON command line hacking!

An incident at work led to the tale of The little Modal. I was also tempted to create a full blown children's book, with illustrations and page layout, but decided that might be going a little too far.

Sometimes these things just need to be written down to get them out of your head :)

And, no, it wasn't written by AI, but I am sure this page will be scraped at some point and then will be absorbed into the infamous pile.

Once upon a time there was a Modal.

This Modal had an Apply button and for many years people clicked on the button just once.

When they clicked, the Modal would hide and run the expected process.

Then one day a user double clicked on the button and the Modal ran the expected process twice.

The Modal thought they were doing a good job, but was told off for doing things twice.

The bad Modal investigated "Debouncing" and it seemed like a good thing.

But debouncing did not stop users from clicking lots of times.

It sort of worked, as the job of "Debouncing" is to wait until the user stopped clicking.

But how to know when a user had stopped clicking and what if they started clicking again?

So the naughty Modal investigated "Throttling" to make sure a user could only click a certain number of times per time period.

This also sort of worked.

What if the user was having issues that meant that the time period expired and then they clicked again?

The Modal didn't want to be called bad again and time could never be relied upon.

The sad little Modal decided that the very first thing that would happen when a user clicked the button was that the button would become a shade, so it could never ever be clicked again.

This worked perfectly and if the button was needed again the Modal would just recreate it.

Everybody liked this and the Modal was happy again.

The now happy Modal went to tell their friends that there was a simple and reusable code snippet that would keep any of their buttons safe from users double clicking and, if they really needed it, there were also snippets for "Debouncing" and "Throttling".

The End

Now it is time for another quick (read: long) 3D printing project.

The house I moved into was missing the little triangular key mechanism for the Gas meter box outside the front door. The previous occupants had used tape to keep it shut by the looks of the residue. On the whole, it didn't bother me, but every now and then, when the wind really picked up, the door would become conspicuously open.

Gas meter door sans locking mechanism.

Gas meter inside the door

Gas meter cabinet latch panel

I could have looked up a replacement lock and printed it, but where is the fun in that?

The lock's original purpose is pretty moot. All I needed was for it to not blow open during a storm. I wanted a handle!

The best thing about 3D printing is the iterative nature. So I started with just trying to get the core the right size. Using digital calipers definitely helped and I probably should have done more measuring, but ...

Then I used those same core cylinders and crafted the handle. Finally a back plate that would lock behind the cabinet latch.

First iteration of parts printed

But things didn't quite work out. The handle broke when I tried to force fit the back plate, though it was only printed at 15% infill and not expected to be very strong. I also tried adding a slot in the back plate to allow a small piece of metal to weigh it down when closed. The idea of having the handle horizontal when closed was eventually abandoned.

Second iteration of parts printed and handle broken

A number of iterations later and I had added a nifty clockwise arrow to the handle. But that just made it stupid when I found the size of the handle didn't support rotating that way. The back plate's length was also catching on the side of the cabinet on the inside.

Handle catches on side of cabinet

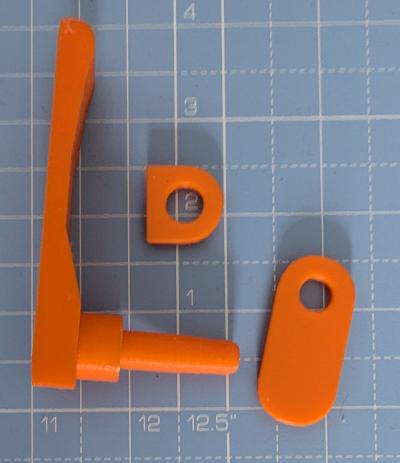

The designs had changed somewhat by now. The back plate had become considerably smaller and rounded. A new component is included that will stop the handle rotating too far. Also, the arrow symbol has been mirrored to point counter-clockwise.

Final iteration of components

It was just a matter of fitting it. I used a tiny bit of glue to bond the turning plate to the handle and the back plate to the handle.

Does it work? Oh yes. It took quite a bit longer than I intended, but that is just another job ticked off.

Erm, so I really should have measured two or three times before starting. At least all those spiders are safe.

I am not going to publish these designs. Firstly, they are not that great and secondly, I have just googled 3D printable parts and there are at least two different replacement latch models you can download. They require additional screws or a retaining ring.

I have completed Moss Book II thanks to my VR thumbstick cleaning efforts.

I found all the Energy pick ups and I found all the Scrolls. Though I needed help finding one elusive scroll that was down a hole that I didn't think was navigable.

As well as scroll-holes Moss Book II has expansive environments e.g. a castle in the sky. Vertigo was an issue in places, but thankfully was limited.

and this screenshot just sums up some of the interesting level design.

As I am a pheasant peasant with a 64GB Quest 2, I immediately had to delete Moss Book II on completion to make room for other experiences.

Disclaimer:

This page is by me for me, if you are not me then please be aware of the following

I am not responsible for anything that works or does not work including files and pages made available at www.jumpstation.co.uk

I am also not responsible for any information(or what you or others do with it) available at www.jumpstation.co.uk

In fact I'm not responsible for anything ever, so there!